- Project title

AI Yoga Learning Assistant

- Group name

Tech Cat Dream

- Sheridan program name

Computer Systems Technology - Software Development and Network Engineering

- Release Date

12/08/2020

- Project Description

The AI Yoga Learning Assistant is a web platform for learning yoga in a fun way using machine learning (ML) pose detection. Made for anyone with a laptop and webcam, it estimates your yoga poses and gives you feedback in real time. Your webcam video stream is displayed side by side with a lesson video.

The platform analyzes your webcam frames, detects 17 body joints in 2D space, and compares them to the reference pose at the matching moment in the lesson video. Real-time feedback helps you improve your posture and take your yoga practice to the next level.
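The comparison step can be sketched roughly as follows. This is a minimal illustration, not the project's actual code. It assumes a keypoint shape modelled on PoseNet's output (`part`, `score`, `position.x/y`). It also assumes that both poses list the same joints in the same order. The normalization and cosine-similarity scheme shown here is one common way to make the comparison independent of where the student stands and how large they appear on camera.

```typescript
// Hypothetical keypoint shape, modelled on PoseNet's output
// ({ part, score, position: { x, y } }); not the project's actual types.
interface Keypoint {
  part: string;
  score: number;
  position: { x: number; y: number };
}

// Translate keypoints so their centroid sits at the origin, then divide the
// flattened [x0, y0, x1, y1, ...] vector by its L2 norm. This removes
// differences in position and size within the frame.
function normalize(keypoints: Keypoint[]): number[] {
  const n = keypoints.length;
  const cx = keypoints.reduce((s, k) => s + k.position.x, 0) / n;
  const cy = keypoints.reduce((s, k) => s + k.position.y, 0) / n;
  const vec: number[] = [];
  for (const k of keypoints) {
    vec.push(k.position.x - cx, k.position.y - cy);
  }
  const norm = Math.hypot(...vec);
  return norm === 0 ? vec : vec.map((v) => v / norm);
}

// Cosine similarity of the two normalized pose vectors, in [-1, 1]
// (1 means the same shape). Both arrays must list the same joints
// in the same order.
function poseSimilarity(live: Keypoint[], reference: Keypoint[]): number {
  if (live.length !== reference.length) {
    throw new Error("poses must have the same number of keypoints");
  }
  const a = normalize(live);
  const b = normalize(reference);
  // Both vectors have unit length, so their dot product is the cosine.
  return a.reduce((sum, v, i) => sum + v * b[i], 0);
}
```

For example, a student pose that is the reference pose shifted across the frame and doubled in size scores 1. A pose whose joints are arranged differently scores lower.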

The technologies we have chosen are:

- PoseNet on TensorFlow.js. TensorFlow.js is a JavaScript library developed by Google that lets you run machine learning models directly in the web browser. PoseNet is a pre-trained model that detects the positions of key body joints in 2D image coordinates.
- React.js for our front-end interface
- Node.js and Express.js for our backend API, which processes uploaded videos by pre-detecting their poses and storing the results
- MongoDB as our NoSQL database to store video details
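Because poses are pre-detected on the backend, the front end only needs to look up the stored reference pose that matches the lesson video's current playback time. The sketch below is illustrative only. The document shape (`LessonVideo`, `PoseFrame`, `timeSec`) is hypothetical and not the project's actual MongoDB schema. It assumes frames are stored sorted by timestamp. The current time would typically come from the lesson video's `HTMLVideoElement.currentTime`.

```typescript
// Hypothetical shape of one pre-detected frame as the backend might store it
// in a lesson video's MongoDB document (field names are assumptions).
interface PoseFrame {
  timeSec: number; // video timestamp the pose was detected at
  keypoints: { part: string; score: number; x: number; y: number }[];
}

interface LessonVideo {
  title: string;
  level: string;
  frames: PoseFrame[]; // assumed sorted by timeSec, ascending
}

// Binary search for the stored frame whose timestamp is closest to the
// lesson video's current playback time.
function referenceFrameAt(lesson: LessonVideo, currentTime: number): PoseFrame | undefined {
  const frames = lesson.frames;
  if (frames.length === 0) return undefined;
  let lo = 0;
  let hi = frames.length - 1;
  // Find the first frame with timeSec >= currentTime (or the last frame).
  while (lo < hi) {
    const mid = (lo + hi) >> 1;
    if (frames[mid].timeSec < currentTime) lo = mid + 1;
    else hi = mid;
  }
  // Compare it with its predecessor and return whichever is nearer.
  if (lo > 0 && currentTime - frames[lo - 1].timeSec <= frames[lo].timeSec - currentTime) {
    return frames[lo - 1];
  }
  return frames[lo];
}
```

A binary search keeps each lookup at O(log n), even for long lessons sampled at a high frame rate. This matters because the lookup runs on every webcam frame.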

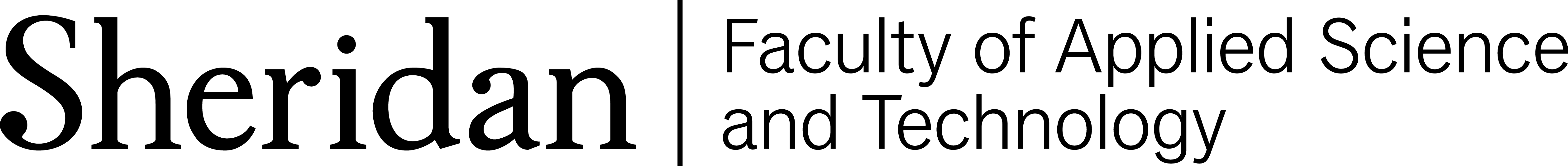

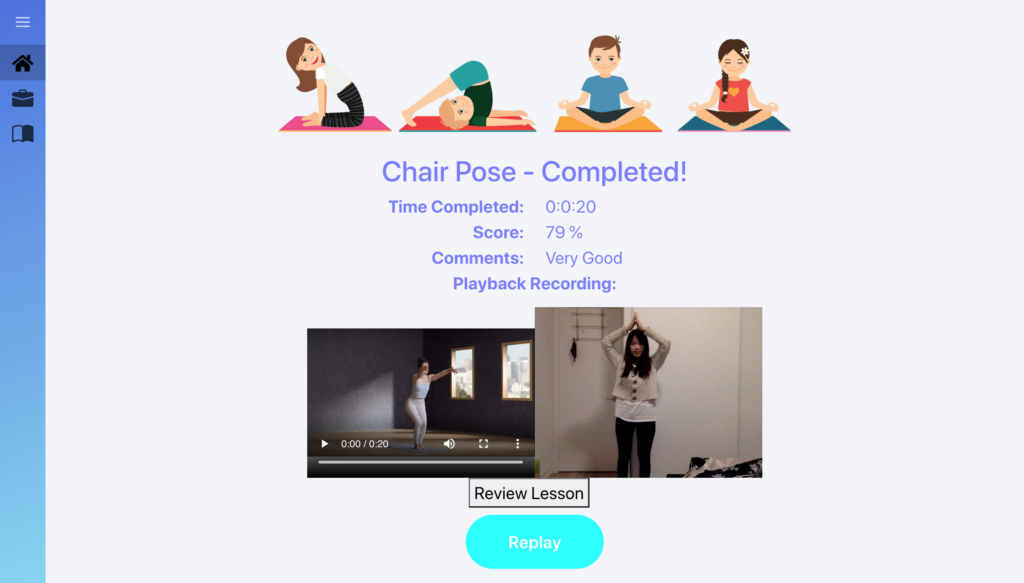

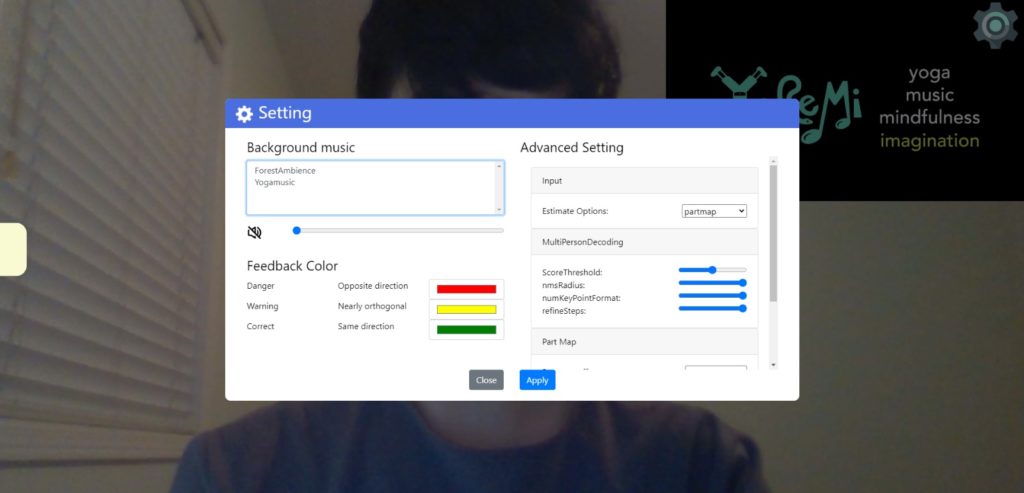

At present, the features we have developed include the ability for instructors to upload new lesson videos. Our platform pre-detects the poses in any custom lesson video. While students follow the lesson video, their real-time body limb positions are scaled and compared against the lesson video at the same point in time. The lesson experience is further enhanced by face filter stickers, optional background music, performance scores, and playback review of your practice.
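A performance score could be derived from the per-frame comparisons collected during an attempt. The rule below is purely hypothetical and not the platform's actual scoring method. It clamps each per-frame similarity (such as a cosine similarity in [-1, 1]) to [0, 1] and averages the values into a 0-100 score. It also reports the share of frames that reached an assumed "pose matched" threshold of 0.9.

```typescript
// Hypothetical summary of one practice attempt.
interface AttemptSummary {
  score: number;        // 0-100, rounded
  matchedRatio: number; // fraction of frames with similarity >= threshold
}

// Hypothetical scoring rule: clamp each similarity to [0, 1], average it
// over the attempt, and count frames at or above the (assumed) threshold.
function summarizeAttempt(similarities: number[], threshold = 0.9): AttemptSummary {
  if (similarities.length === 0) return { score: 0, matchedRatio: 0 };
  let total = 0;
  let matched = 0;
  for (const s of similarities) {
    const clamped = Math.min(1, Math.max(0, s));
    total += clamped;
    if (clamped >= threshold) matched++;
  }
  return {
    score: Math.round((total / similarities.length) * 100),
    matchedRatio: matched / similarities.length,
  };
}
```

For example, `summarizeAttempt([1, 0.8, -0.2, 0.95])` returns `{ score: 69, matchedRatio: 0.5 }`. The negative similarity counts as 0, and two of the four frames meet the threshold.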

Future directions include gesture recognition to replace the in-lesson menu controls, voice feedback to cue body adjustments, and a multiplayer mode that detects multiple players.

- Project background/history

We found this project the most interesting of all the projects listed by industry partners. During the project planning phase, our client suggested using Kinect (a motion-sensing input device that detects poses), but we came up with the idea of using a machine learning library that runs in web browsers (TensorFlow.js).

We divided tasks among the team members (managed by the Project Manager) and held weekly meetings to discuss possible solutions for the project. We continually looked for new ideas and discussed which solution would best meet our client's requirements while keeping costs low (our client is a non-profit organization). Every week, we also met with our Capstone professor to show what we had done and get feedback and suggestions for improving the project.

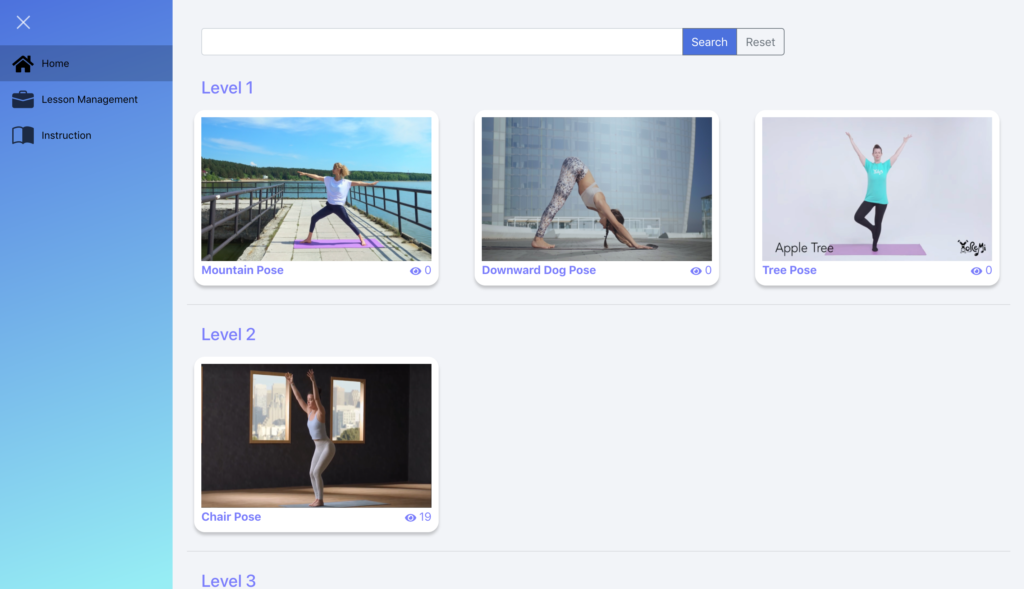

- Project features

- Add new lesson videos to the database (pre-detecting poses across the whole video)

- Delete or edit lesson videos (description, title, level)

- Search lesson videos by name

- Detect poses in real time

- Provide pose feedback in real time

- Review performance after each attempt

- Display stickers for engagement

- Configure gameplay by modifying game settings

- Additional information

Our team of programmers loves exploring computer vision technology. With new JavaScript libraries making ML more approachable, our team has taken on a unique project. We have collaborated to help people learn yoga in an interactive way. Our project manager has a background in research at a motion capture studio. We also have team members with co-op experience in full-stack and front-end development in banking and government. We aim to create solutions that are maintainable and user-centered.

May 1 2021